Brokk: Under the Hood

Brokk is an open-source IDE (github here) focused on empowering humans to supervise AI coders rather than write code the slow way, by hand. So Brokk focuses not on tab-completion but on context management to enable long-form coding in English.

Here is a look at some of the tech that makes Brokk so effective.

Quick Context: JLama, MiniLM-L6-v2, and Gemini 2.0 Flash Lite

Brokk provides Quick Context suggestions (in blue) as you dictate or type your instructions:

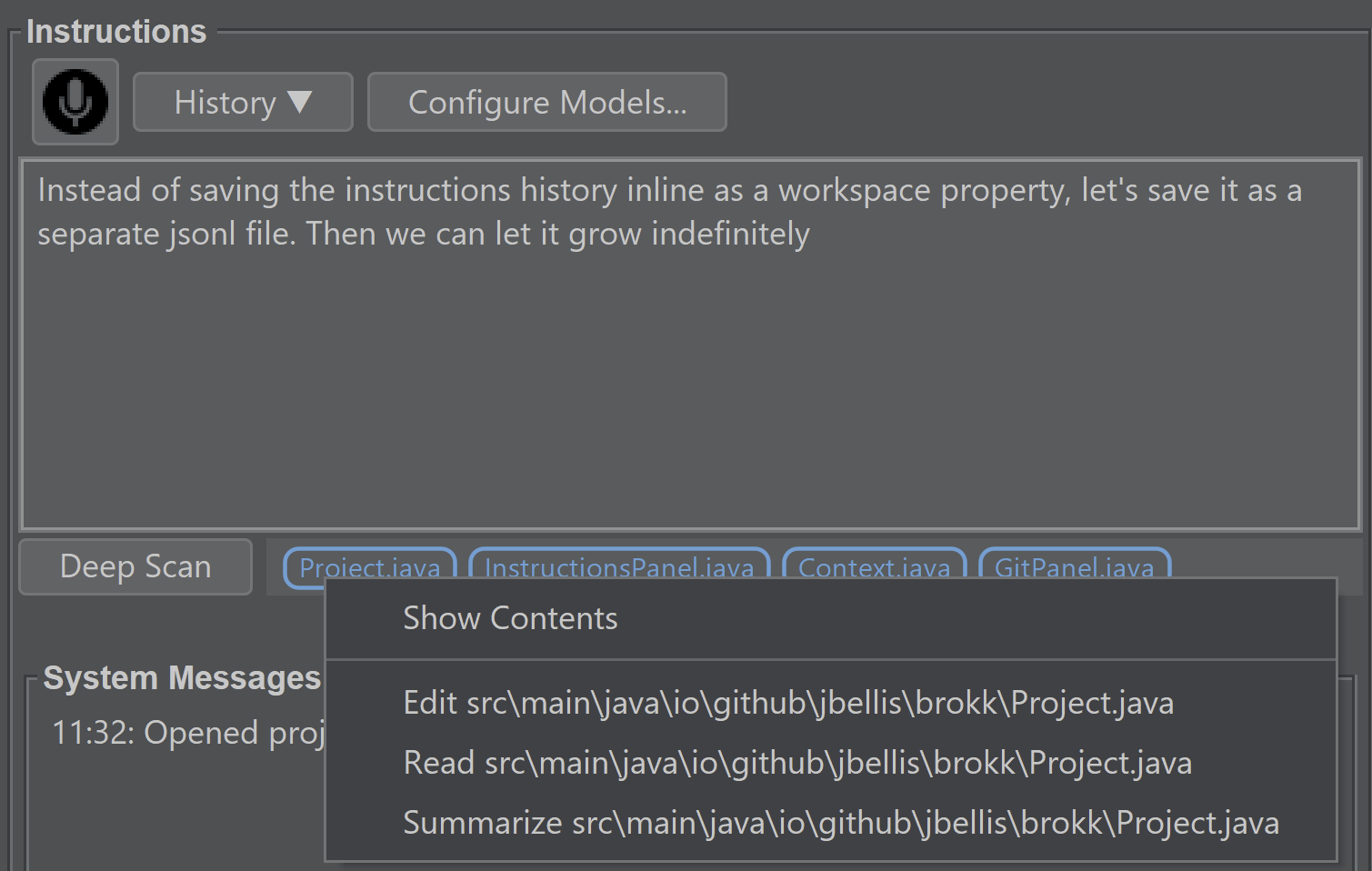

Right-clicking on the suggestions lets you add them to the Workspace.

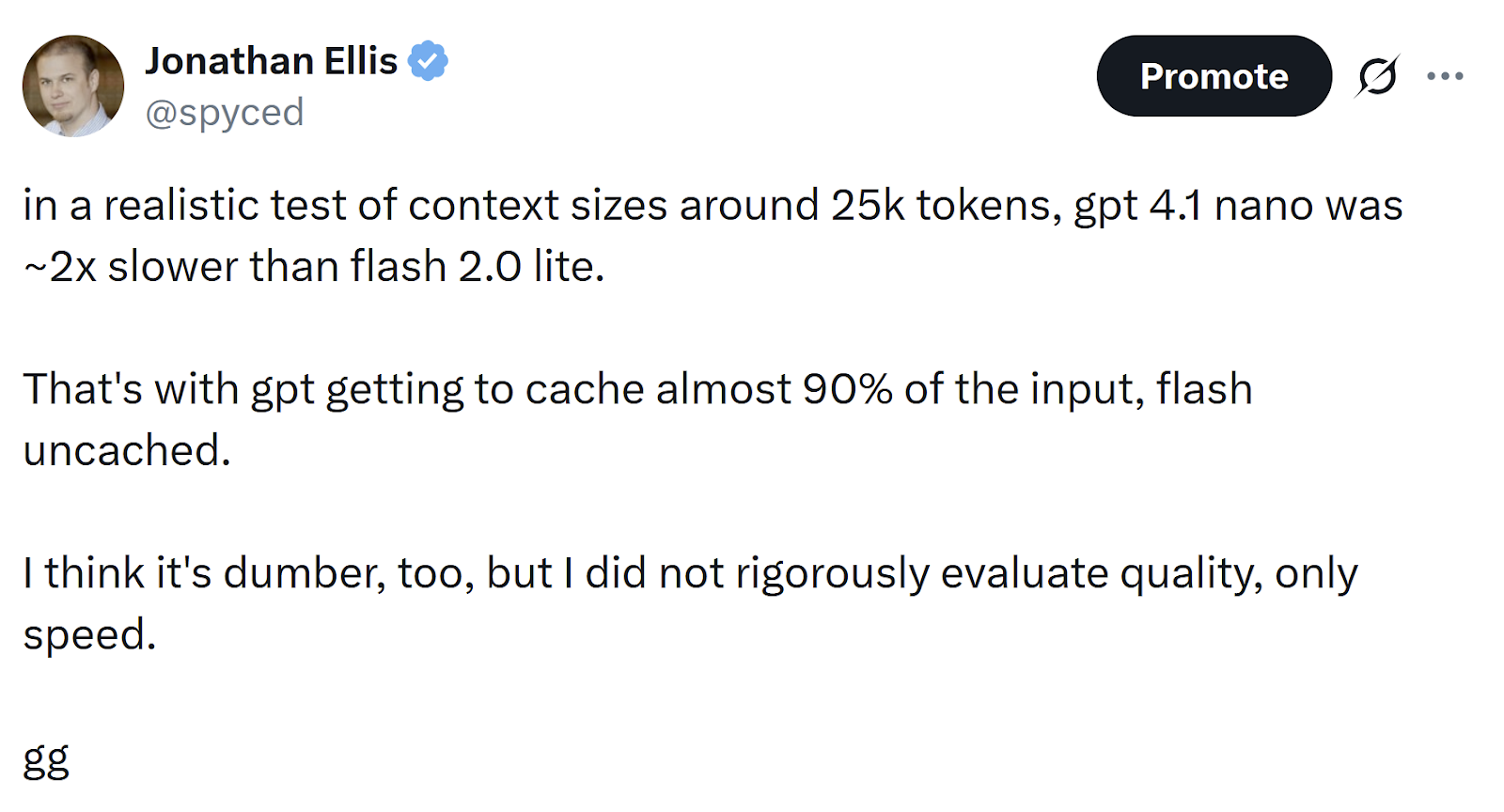

To minimize latency, we use Gemini 2.0 Flash Lite. We tested GPT-4.1 nano as well, but it was significantly slower.

Even with a fast model, we debounce requests rather than calling the LLM on every keystroke. 400ms is about the 90th percentile for debouncing typing. That is, in a controlled transcription scenario, if you haven’t seen any keystrokes in 400ms, there’s a 90% chance that the user is done typing for now. But this doesn’t seem to match how programmers work, which is closer to: type a few words, think, type a few more words. So a 400ms debounce still left us with enough calls to Flash Lite that, even with just one engineer testing it, the traffic got our project auto-blacklisted by Gemini after a few days. (At least, we think that’s what happened; it’s impossible to tell for sure.) And a longer debounce adds enough latency to make for a poor user experience.
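The debounce described above can be sketched roughly as follows. This is a minimal illustration and not Brokk's actual code: the class name `InstructionDebouncer` and its callback are hypothetical, and the delay is whatever quiet period you choose (400ms in the discussion above).

```java
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;
import java.util.function.Consumer;

/** Hypothetical debouncer: runs the action only after the input has been quiet for delayMs. */
final class InstructionDebouncer implements AutoCloseable {
    private final ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor();
    private final long delayMs;
    private final Consumer<String> action;
    private ScheduledFuture<?> pending; // guarded by "this"

    InstructionDebouncer(long delayMs, Consumer<String> action) {
        this.delayMs = delayMs;
        this.action = action;
    }

    /** Call on every change with the current text; each call restarts the quiet-period timer. */
    synchronized void onTextChanged(String text) {
        if (pending != null) {
            pending.cancel(false); // drop the previously scheduled request, if it has not run yet
        }
        pending = scheduler.schedule(() -> action.accept(text), delayMs, TimeUnit.MILLISECONDS);
    }

    /** Stops the background thread so the JVM can exit. */
    @Override
    public void close() {
        scheduler.shutdownNow();
    }
}
```

With this shape, a burst of keystrokes produces a single callback carrying the latest text. The trade-off in the paragraph above shows up directly in `delayMs`: a short delay fires often during think-pauses, and a long one delays every suggestion.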

Enter JLama, an inference engine like llama.cpp, but for Java.

Brokk uses JLama to generate semantic embeddings of the instructions using the MiniLM-L6-v2 embeddings model. We picked this model because it’s small and fast, even on CPU. Now, when your instructions change, Brokk can ask JLama “Is this new version semantically distinct from the last one we got suggestions for?” before making any LLM requests.
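One way to picture that check is a cosine-similarity gate over embeddings. The sketch below is an assumption about the general shape, not Brokk's implementation: `SemanticChangeGate`, the `embedder` function, and the similarity threshold are all hypothetical, and the embedder stands in for a call into JLama with MiniLM-L6-v2 (whose API is not shown here).

```java
import java.util.function.Function;

/** Hypothetical gate: lets a new instruction through only if its embedding has drifted enough. */
final class SemanticChangeGate {
    private final Function<String, float[]> embedder; // stand-in for the embedding model call
    private final double maxSimilarity;               // assumed threshold; at or above this counts as "same"
    private float[] lastAccepted;                     // embedding of the last text we requested suggestions for

    SemanticChangeGate(Function<String, float[]> embedder, double maxSimilarity) {
        this.embedder = embedder;
        this.maxSimilarity = maxSimilarity;
    }

    /** Returns true if the text is distinct enough from the last accepted text to justify an LLM request. */
    synchronized boolean shouldRequest(String text) {
        float[] current = embedder.apply(text);
        if (lastAccepted != null && cosine(lastAccepted, current) >= maxSimilarity) {
            return false; // too similar: skip the request and keep the old baseline
        }
        lastAccepted = current;
        return true;
    }

    /** Cosine similarity of two equal-length vectors; returns 0 if either vector is all zeros. */
    static double cosine(float[] a, float[] b) {
        if (a.length != b.length) {
            throw new IllegalArgumentException("dimension mismatch");
        }
        double dot = 0, normA = 0, normB = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            normA += a[i] * a[i];
            normB += b[i] * b[i];
        }
        if (normA == 0 || normB == 0) {
            return 0.0;
        }
        return dot / Math.sqrt(normA * normB);
    }
}
```

Note that the baseline only moves when a request is actually made, so a series of small edits that add up to a real change in meaning will eventually cross the threshold.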

Deep Scan and Agentic Search: Brute Force and Tool Calls

Deep Scan and Search are two different ways to find relevant context for your task.

Deep Scan does a job similar to Quick Context’s: given task instructions and the Workspace contents, suggest additional files that will be needed to accomplish the task. In both cases, this is done with brute force: we give the LLM the entire summarized project, or as much as will fit in its context, and ask it to decide based on that.
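The "as much as will fit" step can be pictured as a simple budgeted pack. This is only a sketch under stated assumptions: `SummaryPacker` is a hypothetical name, the four-characters-per-token estimate is a crude stand-in for a real tokenizer, and the order in which Brokk considers files is not described in this post, so the code just uses the map's iteration order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical helper: picks file summaries, in order, until the token budget is used up. */
final class SummaryPacker {
    /** Crude token estimate (assumption: about 4 characters per token), rounded up. */
    static int estimateTokens(String text) {
        return (text.length() + 3) / 4;
    }

    /** Returns the file names whose summaries fit; stops at the first summary that would overflow. */
    static List<String> pack(Map<String, String> summariesByFile, int tokenBudget) {
        List<String> included = new ArrayList<>();
        int used = 0;
        for (Map.Entry<String, String> entry : summariesByFile.entrySet()) {
            int cost = estimateTokens(entry.getValue());
            if (used + cost > tokenBudget) {
                break; // the rest of the project does not fit in this prompt
            }
            used += cost;
            included.add(entry.getKey());
        }
        return included;
    }
}
```

Passing a `LinkedHashMap` keeps the order predictable, which matters because whatever falls past the budget is invisible to the model.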

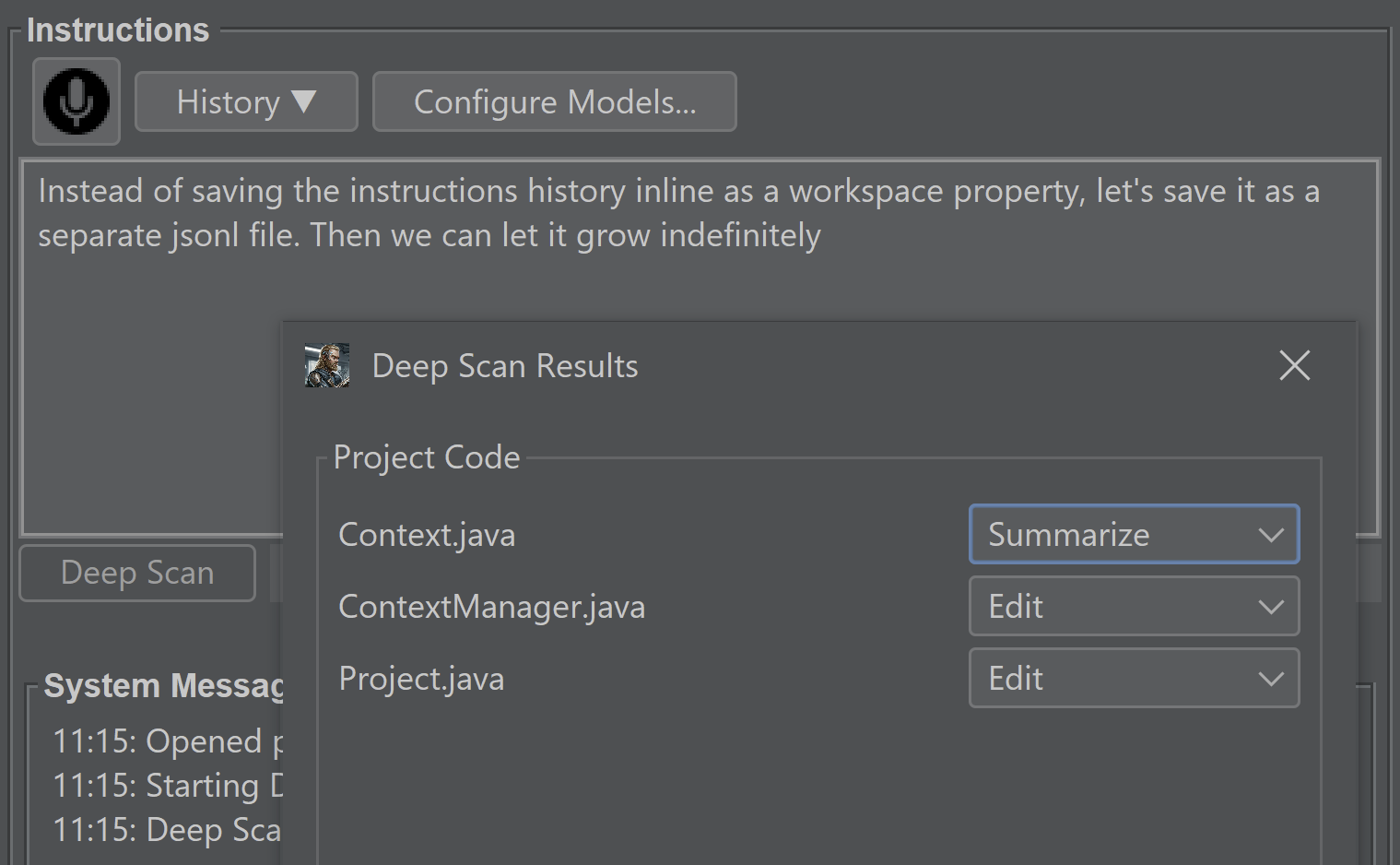

There are important differences. Deep Scan uses the Edit model, a smarter (but slower) LLM. Since it takes several seconds, we only run it when the user explicitly asks for it. You can see from the example above that Deep Scan’s recommendations are materially better: even though the text mentions “instructions,” InstructionsPanel (suggested by Quick Context) is not actually relevant to the task, and Deep Scan leaves it out.

The other main difference is that instead of just surfacing potentially relevant files and leaving it up to the user what to do with them, Deep Scan makes specific recommendations as to whether the task requires editing the file or whether it can be summarized instead.

But Deep Scan and Quick Context are both single-turn inference: we give the model some context, ask it to make a recommendation, and we’re done. Often, this is enough to let you proceed straight to coding instead of first iterating on a plan through the Ask action.

Sometimes a single turn isn’t enough, though: the task may depend on details that the summarization process elides, your project may be too large to fit even summaries into Gemini’s context, or the model may simply not be smart enough to see the right connections.

That’s when Search shines. Search gives the LLM access to (roughly) the same tools to explore the code that Brokk exposes in its UI, and lets the model use them to find the asked-for information in your codebase across multiple turns. This is much slower, but the results are astonishingly good. For example, here’s Brokk’s explanation of how BM25 search works in Cassandra.
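The multi-turn pattern described above looks roughly like the loop below. Everything in it is illustrative: `SearchLoop`, `Step`, the `Model` interface, and the string-in, string-out tool signature are hypothetical simplifications, not Brokk's real tool-calling API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical agent loop: the model alternates between calling tools and, eventually, answering. */
final class SearchLoop {
    /** One model decision: either call a named tool with an argument, or finish with an answer. */
    static final class Step {
        final String tool;     // null when the model is done
        final String argument; // the tool argument, or the final answer when tool is null

        private Step(String tool, String argument) {
            this.tool = tool;
            this.argument = argument;
        }

        static Step call(String tool, String argument) {
            return new Step(tool, argument);
        }

        static Step answer(String text) {
            return new Step(null, text);
        }
    }

    /** Stand-in for the LLM: sees the transcript so far and picks the next step. */
    interface Model {
        Step next(List<String> transcript);
    }

    /** Runs up to maxTurns model decisions, feeding each tool result back into the transcript. */
    static String run(String query, Model model, Map<String, Function<String, String>> tools, int maxTurns) {
        List<String> transcript = new ArrayList<>();
        transcript.add("query: " + query);
        for (int turn = 0; turn < maxTurns; turn++) {
            Step step = model.next(transcript);
            if (step.tool == null) {
                return step.argument; // the model decided it has enough information
            }
            Function<String, String> tool = tools.get(step.tool);
            String result = (tool == null) ? "unknown tool: " + step.tool : tool.apply(step.argument);
            transcript.add(step.tool + "(" + step.argument + ") -> " + result);
        }
        return "no answer within " + maxTurns + " turns";
    }
}
```

Each turn is a full model round trip, which is where the extra latency comes from. The turn cap is a design choice in this sketch to keep a confused model from looping forever.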

Code Intelligence: Joern, with a side of Tree-sitter

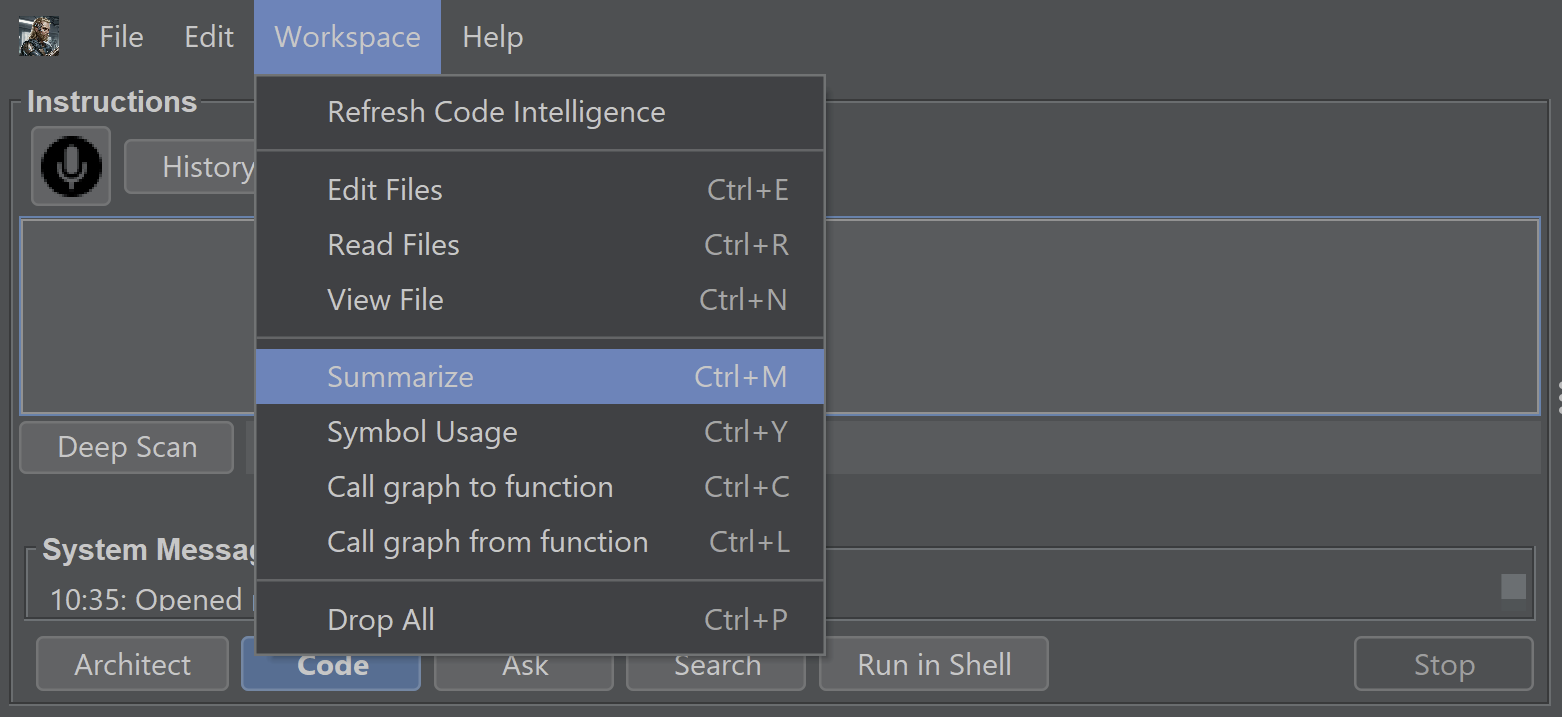

Everything Brokk can add to the Workspace, besides bare files, depends on its Code Intelligence engine.

We spent a lot of time evaluating options to power that engine, and the best that we found was Joern. None of the other projects met all of our criteria:

- Fully OSS (rules out CodeQL, SciTools, SonarSource)

- Works without special build integration (rules out SCIP)

- Offers type inference, not just AST (rules out Semgrep, Tree-sitter)

- Fast enough to compute the call graph for million-line projects in single-digit minutes (rules out LSP)

This was a little disappointing, especially since my preferred environment in which to write client-side code is Python and Joern is firmly JVM-based. (In Scala, no less!) But the upside of building Brokk in Java has been real: all kinds of things are easier when you’re working in an environment with real multithreading, and each thread executes ~100x faster than native Python.

The main downside to Joern is that while it supports most of today’s popular languages reasonably well (with Rust being the main exception), it doesn’t give you as uniform an API across them as you’d expect. So extending Brokk’s support past Java is moving more slowly than we initially hoped.

As a stopgap, Brokk 0.9 adds Tree-sitter to offer partial support for non-Java languages, initially Python, JavaScript, and C#. “Partial support” specifically means summarization: this is the most critical element, since it reduces the number of tokens required to teach the LLM about your APIs by roughly 10x.
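To give a feel for why summarization saves so many tokens, here is a deliberately naive sketch that keeps declarations and drops method bodies. It is not how Brokk does it: Brokk works from a real parse via Tree-sitter or Joern, while this stand-in, the hypothetical `NaiveSummarizer`, just counts braces and will be fooled by braces inside strings or comments.

```java
/** Toy skeleton extractor: keeps top-level and member declarations, elides anything nested deeper. */
final class NaiveSummarizer {
    /** Replaces every block at brace depth 2 or deeper with "{ ... }". */
    static String skeleton(String source) {
        StringBuilder out = new StringBuilder();
        int depth = 0;
        for (char c : source.toCharArray()) {
            if (c == '{') {
                depth++;
                if (depth == 1) {
                    out.append(c);         // opening brace of the type itself
                } else if (depth == 2) {
                    out.append("{ ... }"); // placeholder for an elided member body
                }
            } else if (c == '}') {
                if (depth == 1) {
                    out.append(c);         // closing brace of the type itself
                }
                depth--;
            } else if (depth <= 1) {
                out.append(c);             // signatures and fields survive; body text is skipped
            }
        }
        return out.toString();
    }
}
```

For a class with long method bodies, the output is mostly signatures, which is the part of the code the LLM needs in order to call your APIs correctly.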

Shout out to the author of the tree-sitter-ng Java wrapper for Tree-sitter: of the four Java Tree-sitter wrappers we evaluated, this is the only one that actually worked.

Wrapping Up

Brokk is designed to keep you in command while the AI handles the tactical details of editing and syntax. JLama keeps suggestions snappy, Joern provides insight into how everything fits together, and the whole thing stays open so you can audit, fork, or extend as you like. None of these pieces alone is magic, but together they let Brokk tackle the unglamorous million-line reality of enterprise codebases, not just demos and greenfield projects. If that sounds useful, try it now.