Massive Refactors in Minutes

How Brokk Handles Massive Refactors in Minutes, Not Hours

In a recent evaluation, we compared the performance of our AI-assisted development tool, Brokk, against Cursor, a well-known AI coding assistant. The goal was to determine which tool is better suited to large-scale refactoring in a real-world codebase. The specific task was preparing a large Java project for NullAway, a popular static analysis tool for detecting nullability issues, by adding the appropriate @Nullable annotations across all classes.

The Prompt

Both tools were given the same task:

"Prepare every class in this project for enabling Null Away by adding appropriate @Nullable annotations."

This task is non-trivial. It requires understanding variable lifecycles, method contracts, and inter-class dependencies in order to annotate only where necessary, without over- or under-applying @Nullable.
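To make the task concrete, here is a minimal sketch of what "appropriate" annotations look like. NullAway treats unannotated fields, parameters, and return values as non-null, so @Nullable belongs only where null is a legitimate value. The `UserDirectory` class is hypothetical. The `Nullable` annotation is defined locally only so the example compiles on its own. A real project would typically use an existing annotation, such as JSpecify's `org.jspecify.annotations.Nullable`. As we understand it, NullAway matches nullability annotations by their simple name `Nullable`.

```java
import java.lang.annotation.Documented;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.HashMap;
import java.util.Map;

// Local stand-in for a real @Nullable annotation (assumption: used only so this
// sketch compiles without extra dependencies).
@Documented
@Retention(RetentionPolicy.CLASS)
@Target({ElementType.FIELD, ElementType.METHOD, ElementType.PARAMETER})
@interface Nullable {}

// Hypothetical class showing where @Nullable is and is not needed.
class UserDirectory {
    private final Map<String, String> emailsById = new HashMap<>();

    // Stays null until setFallbackEmail is called, so it needs @Nullable.
    private @Nullable String fallbackEmail;

    // Always assigned in the constructor, so no annotation.
    private final String domain;

    UserDirectory(String domain) {
        this.domain = domain;
    }

    void register(String id, String email) {
        emailsById.put(id, email);
    }

    // Callers may pass null to clear the fallback, so the parameter is @Nullable.
    void setFallbackEmail(@Nullable String email) {
        this.fallbackEmail = email;
    }

    // Map.get returns null for unknown ids, so the return type is @Nullable.
    @Nullable String findEmail(String id) {
        return emailsById.get(id);
    }

    // Resolves every null case itself, so its return type stays non-null.
    String emailOrDefault(String id) {
        String email = findEmail(id);
        if (email != null) {
            return email;
        }
        String fallback = fallbackEmail; // read the field once into a local
        return fallback != null ? fallback : "unknown@" + domain;
    }
}
```

Annotating `emailOrDefault` as well would be an example of over-applying @Nullable. That method never returns null, and the extra annotation would force every caller into needless null checks.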

The Results

- Brokk completed the task in 1 minute and 55 seconds, producing consistent, project-wide annotations.

- Cursor, in contrast, was still working after 12 minutes and had completed only about 20% of the annotation process. Extrapolating that rate to the full codebase, Cursor would likely have needed close to 60 minutes to finish.
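The 60-minute figure is a simple linear extrapolation, and the same numbers give the speedup. The sketch below only does that arithmetic. The class and method names are illustrative, and the extrapolation assumes Cursor's rate would have stayed constant.

```java
// Back-of-the-envelope arithmetic for the timings reported above.
class RefactorTimingEstimate {
    // Linear extrapolation: if fractionDone of the work took elapsedMinutes,
    // the whole job takes elapsedMinutes / fractionDone (assumes a constant rate).
    static double extrapolateTotalMinutes(double elapsedMinutes, double fractionDone) {
        if (fractionDone <= 0 || fractionDone > 1) {
            throw new IllegalArgumentException("fractionDone must be in (0, 1]");
        }
        return elapsedMinutes / fractionDone;
    }

    // How many times faster the faster run is, given both total times in seconds.
    static double speedup(double slowerSeconds, double fasterSeconds) {
        return slowerSeconds / fasterSeconds;
    }

    public static void main(String[] args) {
        // 12 minutes for about 20% of the work gives roughly 60 minutes in total.
        double cursorTotalMinutes = extrapolateTotalMinutes(12.0, 0.20);
        double brokkSeconds = 60 + 55; // 1 min 55 s = 115 s
        double ratio = speedup(cursorTotalMinutes * 60, brokkSeconds);
        System.out.printf("Cursor (extrapolated): %.0f min%n", cursorTotalMinutes);
        System.out.printf("Speedup: %.1fx%n", ratio);
    }
}
```

Roughly 3,600 seconds against 115 seconds works out to a factor of about 31. That is where the "approximately 30 times" throughput figure comes from.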

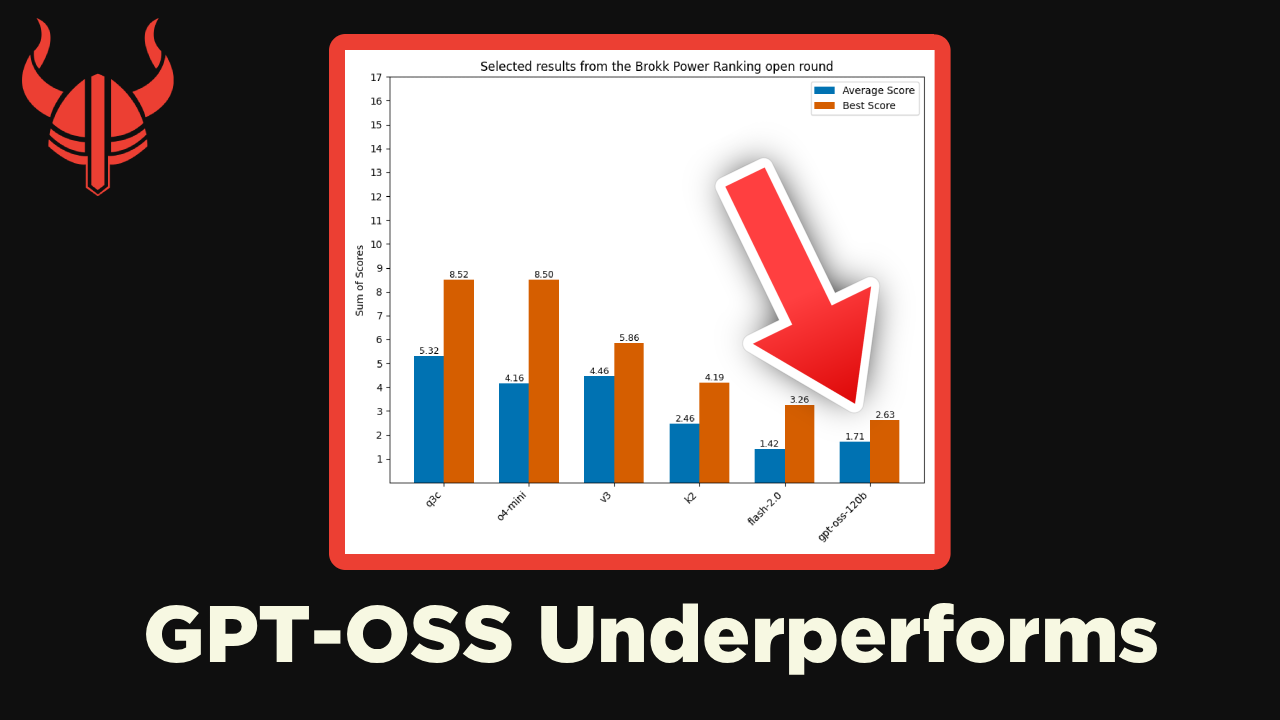

Model Comparison

Much of this gap, including the difference in which models each tool needed, comes from BlitzForge, a tool within Brokk that optimizes how work is split up and distributed. BlitzForge processes the refactor file by file rather than attempting to load and reason about the entire project at once. This keeps the context each model call has to handle small. It shifts much of the complexity into orchestration logic instead of requiring the model to hold project-wide context in its context window. As a result, Brokk can rely on smaller, faster, and cheaper models while still delivering accurate transformations that scale to large projects.
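The general idea of per-file orchestration can be sketched as a simple fan-out. This is not BlitzForge's actual implementation. `FileTransformer` and `PerFileOrchestrator` are hypothetical names, and `FileTransformer.transform` stands in for a single model call whose prompt contains only one file. The point is structural: each task sees one file, so the context per call is bounded by file size rather than project size, and independent tasks can run in parallel on a thread pool.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical: transforms ONE file's source, e.g. by wrapping a single model
// call whose prompt contains only this file.
interface FileTransformer {
    String transform(String path, String source) throws Exception;
}

// Hypothetical orchestrator: one independent task per file, run in parallel.
class PerFileOrchestrator {
    private final FileTransformer transformer;
    private final int parallelism;

    PerFileOrchestrator(FileTransformer transformer, int parallelism) {
        this.transformer = transformer;
        this.parallelism = parallelism;
    }

    // Submits every file as its own task and gathers results in input order.
    Map<String, String> run(Map<String, String> sourcesByPath) {
        ExecutorService pool = Executors.newFixedThreadPool(parallelism);
        try {
            List<String> paths = new ArrayList<>(sourcesByPath.keySet());
            List<Future<String>> futures = new ArrayList<>();
            for (String path : paths) {
                String source = sourcesByPath.get(path);
                // Each task sees a single file, so per-call context stays small
                // no matter how large the project is.
                futures.add(pool.submit(() -> transformer.transform(path, source)));
            }
            Map<String, String> results = new LinkedHashMap<>();
            for (int i = 0; i < paths.size(); i++) {
                try {
                    results.put(paths.get(i), futures.get(i).get());
                } catch (ExecutionException e) {
                    throw new IllegalStateException("Transform failed for " + paths.get(i), e.getCause());
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                    throw new IllegalStateException("Interrupted while waiting for results", e);
                }
            }
            return results;
        } finally {
            pool.shutdown(); // let submitted tasks finish; accept no new ones
        }
    }
}
```

In this design, one file's result does not depend on another's, so a failed file could in principle be retried on its own. The sketch simply surfaces the first failure as an exception.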

Here's our video on the BlitzForge tool within Brokk:

BlitzForge

This article from Medium breaks down why LLM performance often degrades as the size of the input context grows:

“Context rot is the slow decay of model performance as input length increases. It’s not a failure of scale. It’s a failure of relevance.”

The article explains that as the context window expands, models struggle to prioritize the right information. Instead of getting smarter with more data, they often lose focus, introducing hallucinations, inconsistencies, or irrelevant completions. This degradation becomes especially noticeable in tasks that require precise, high-signal reasoning across long inputs, which is exactly the kind of reasoning large-scale refactors demand.

This thread on X from @nrehiew_ goes into more detail on context rot, summarizing a recent report from Chroma Research:

“Some notes on the Context Rot research. I think there are pretty big/validated implications on several use cases and how we should think about these models.” (wh, @nrehiew_, July 31, 2025)

Testing also revealed a difference in model requirements. Cursor could only make progress using Gemini 2.5 Pro, one of the more advanced and resource-intensive models. With other models, Cursor could not even begin the task.

By contrast, Brokk completed the entire job on the first attempt using the much more affordable Gemini 2.5 Flash model. No retries were needed, and performance remained stable throughout.

By the end of the recorded Cursor session, Cursor had refactored only 41 of 217 files. Under Cursor's new pricing model, completing the full task at this rate could consume a large portion, or potentially all, of a monthly usage allowance. This raises practical concerns for teams that rely on Cursor for large-scale or batch-oriented transformations.

Observations

- Performance: Brokk was significantly faster, with roughly 30 times the throughput (1 minute 55 seconds versus an estimated 60 minutes).

- Scalability: Cursor appeared to slow down as it progressed, suggesting performance degradation over larger codebases.

- Project Awareness: Brokk demonstrated a stronger understanding of the code structure and nullability context, making fewer redundant or questionable annotations.

- Cost-Effectiveness: Brokk delivered better results using a lower-cost model, while Cursor required a premium model just to make partial progress.

Conclusion

This test highlights Brokk’s strength in handling large-scale, systematic code transformations with minimal overhead. Cursor may still be useful for smaller tasks or interactive refactoring. However, Brokk’s performance in this evaluation points to its potential as a more scalable and cost-effective solution for batch-oriented development tasks, particularly those involving annotation and static analysis preparation.

See It For Yourself

Curious to watch the test in action? We've recorded the full session so you can see exactly how each tool performed in real time.

Brokk's Video

Brokk's test

Cursor's Video

Cursor's test