The Best Open Weights Coding Models of 2025

DeepSeek just released what will probably be the last major open coding model of 2025, so it's a good time to reflect and take stock of where we're at. (All numbers are from the Brokk Power Ranking.)

Local models: almost good enough to be interesting

We see people reporting 150 tokens/s from Qwen 3 Coder on an RTX 5090 and over 200 tokens/s from GPT OSS 20B. That's roughly twice as fast as Sonnet!

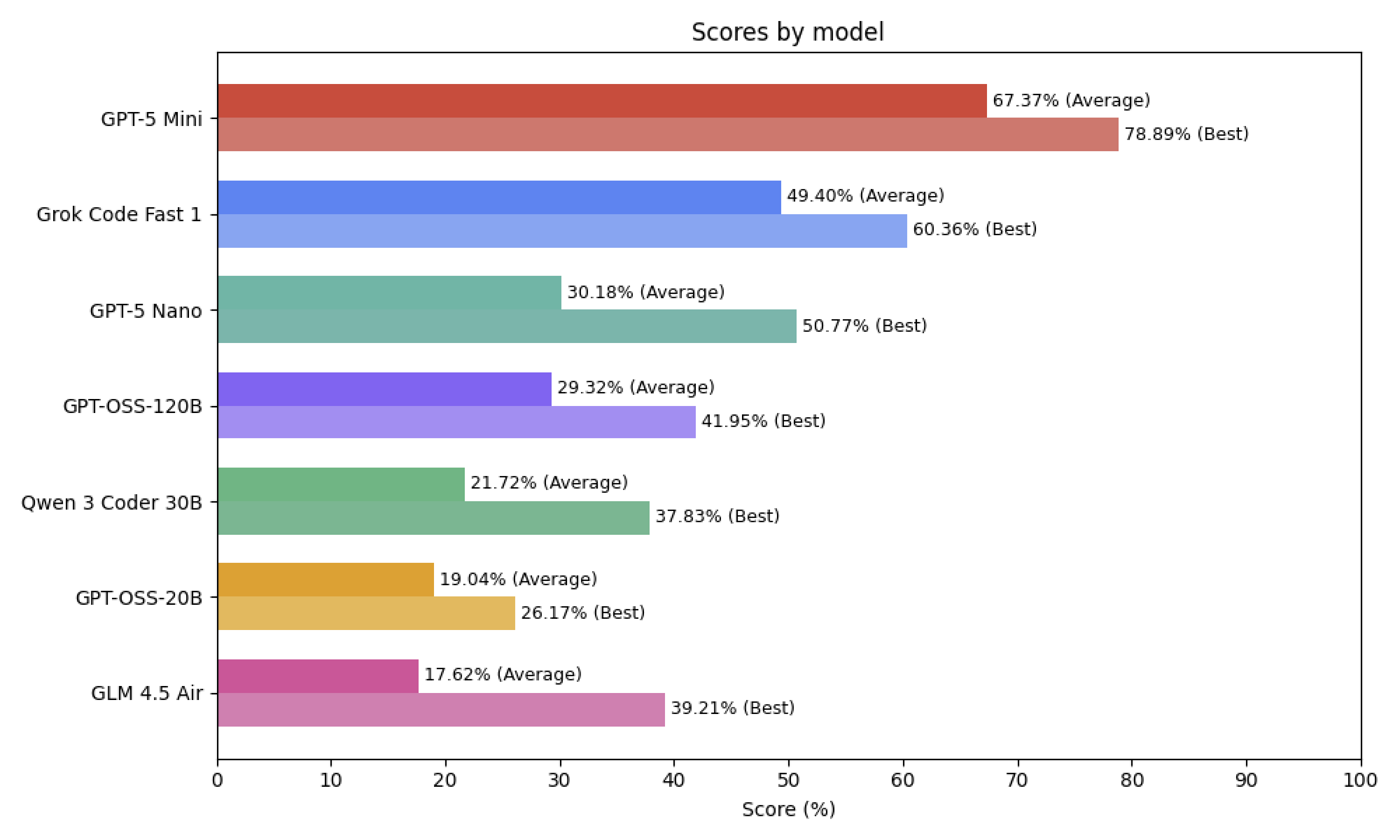

But models this small give up a lot of intelligence. Here's how the best local-sized models stack up against budget champs GPT 5-Mini and Grok Code Fast 1, as well as GPT 5-Nano:

So if your use case is solved by a model 2/3 as smart as GPT 5-Nano, then you're in luck! The rest of us will have to keep waiting.

For top models, the closed-to-open gap is six months

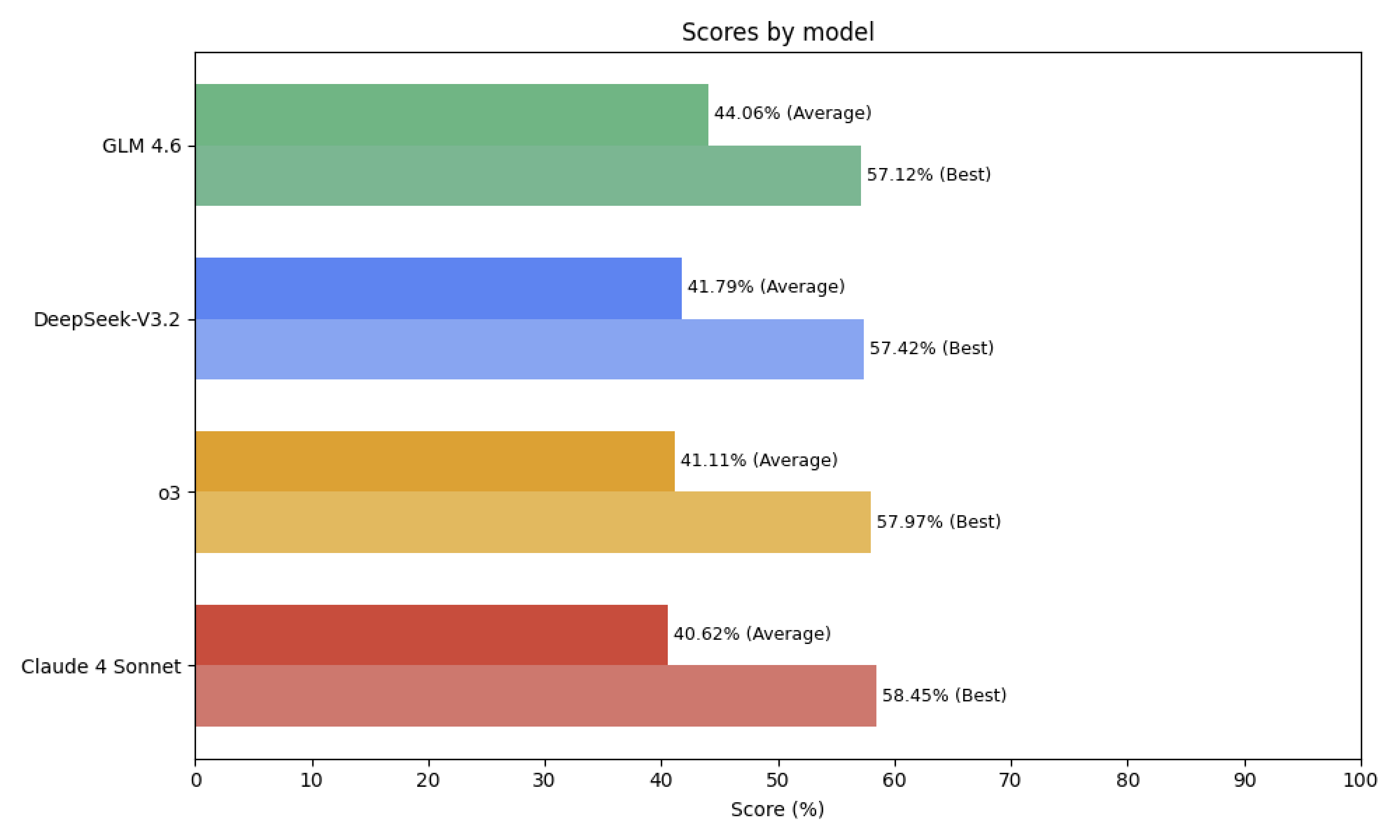

The best (full-sized) open-weights models are only about six months behind the top closed models, with GLM 4.6 (September) and DeepSeek-V3.2 (December) matching the performance of o3 (April) and Sonnet 4 (May):

And GLM 4.6 and DeepSeek-V3.2 are both significantly less expensive than even the lightweight models from the big labs. (GPT 5-Mini, which is an amazing bargain, comes close.)
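As a rough sanity check on the six-month figure, here is a minimal sketch that computes the gap from the release months quoted above. Pairing every open model with every closed model and averaging is an assumption made for illustration, not necessarily how the figure was derived, and all four releases are assumed to fall in 2025.

```python
# Release months (all assumed to be in 2025), taken from the text above.
OPEN_RELEASES = {"GLM 4.6": 9, "DeepSeek-V3.2": 12}
CLOSED_RELEASES = {"o3": 4, "Sonnet 4": 5}


def month_gaps(open_models, closed_models):
    """Return the release gap in months for every (open, closed) pairing."""
    return {
        (open_name, closed_name): open_month - closed_month
        for open_name, open_month in open_models.items()
        for closed_name, closed_month in closed_models.items()
    }


gaps = month_gaps(OPEN_RELEASES, CLOSED_RELEASES)
average_gap = sum(gaps.values()) / len(gaps)

for (open_name, closed_name), gap in gaps.items():
    print(f"{open_name} vs {closed_name}: {gap} months")
print(f"average: {average_gap:.1f} months")
```

Under these assumptions the individual pairings range from four to eight months and average out to six.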

Speed is the final boss for open models

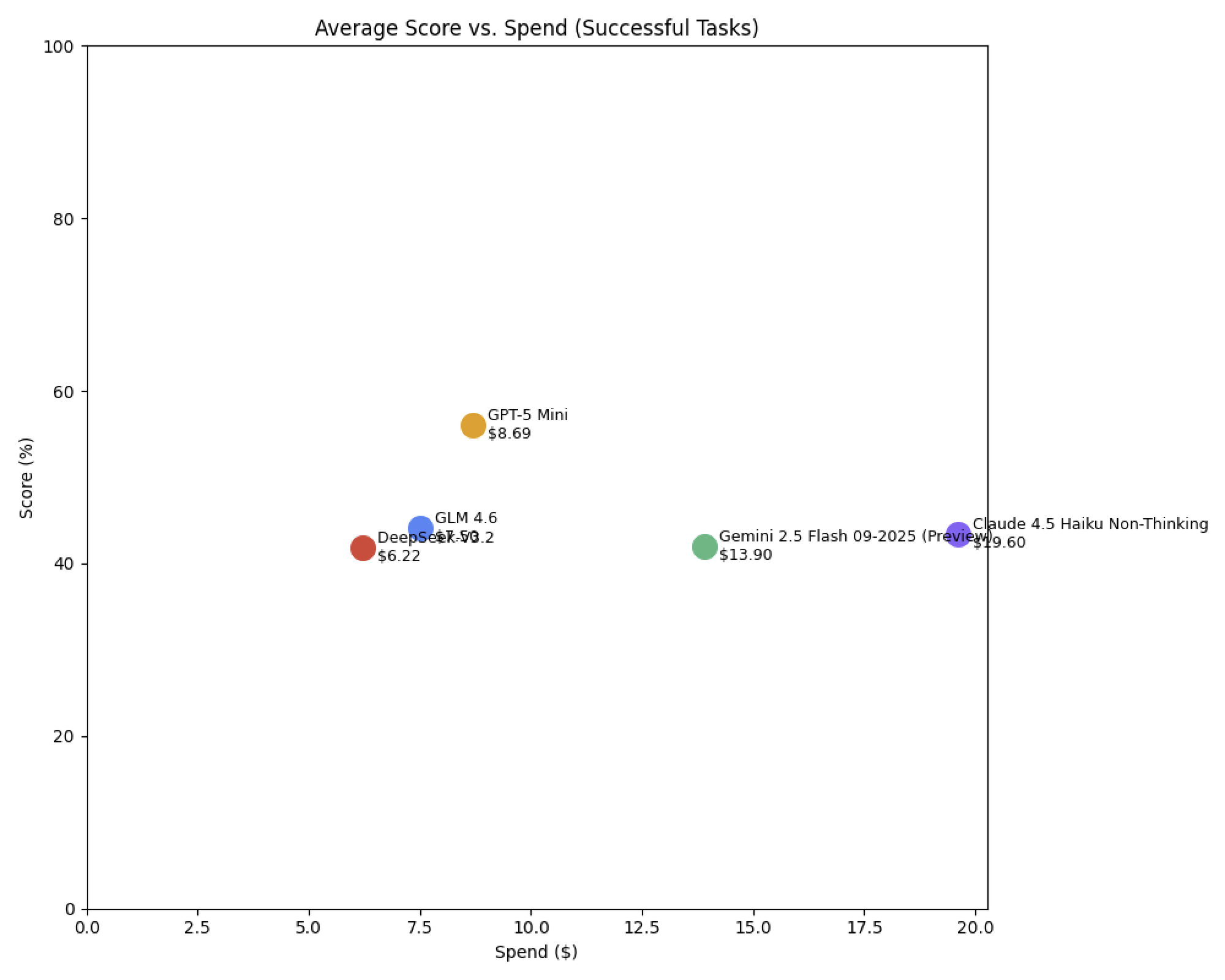

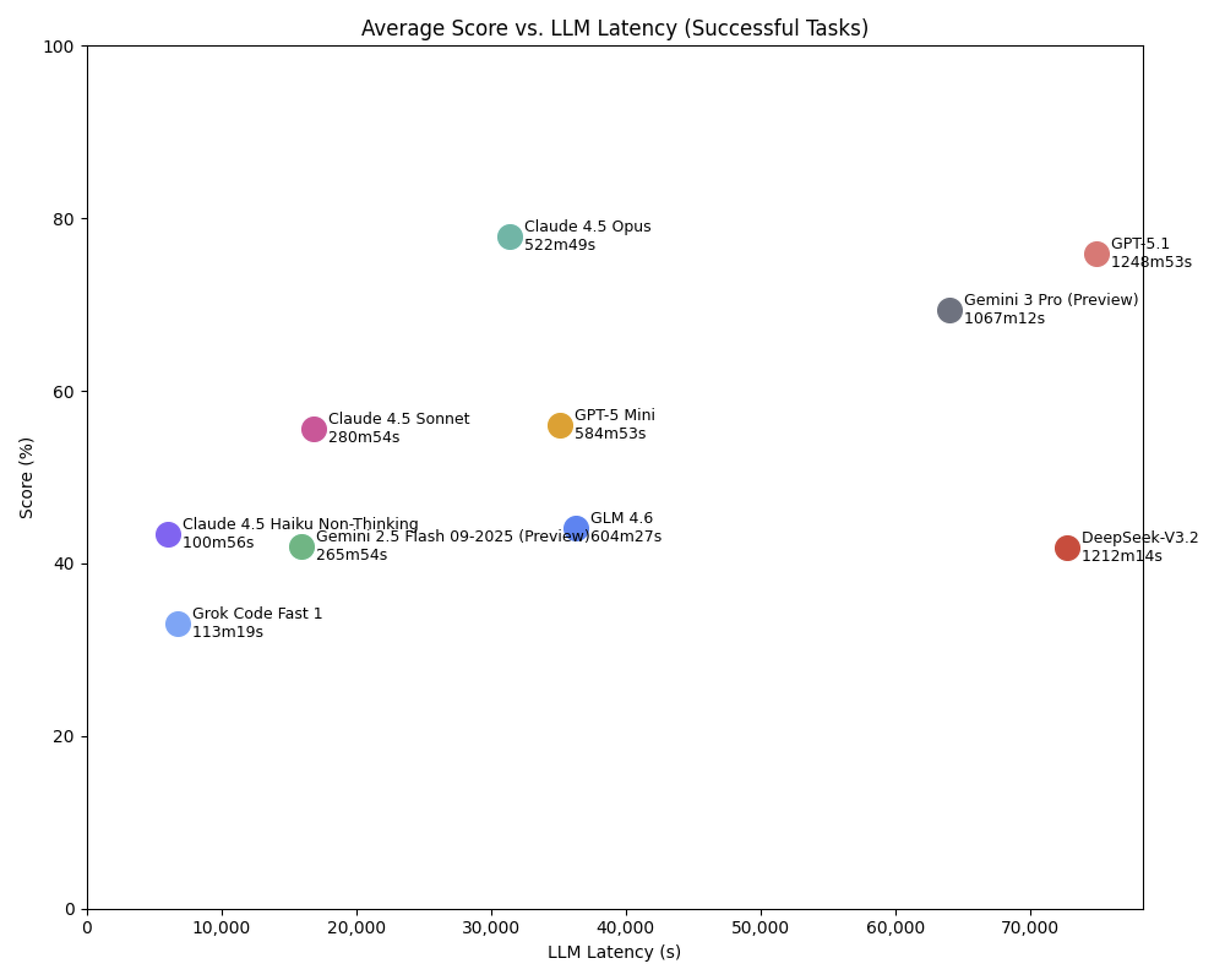

The bad news is that open models are still much slower than closed-lab models at the same level of intelligence. Here we show both the large and lightweight closed models:

It's impossible to say what the dominant factors are here, but it's certainly some combination of the following:

- The closed models are smaller.

- The closed models have better-optimized inference infrastructure.

- The open labs don't have enough capacity to keep up with demand.

Looking ahead

If open labs can maintain or narrow their six-month gap in pure intelligence, their next great hurdle is efficiency. We’ve already seen closed labs successfully pivot to speed-optimized architectures this year, most notably in Anthropic’s Haiku 4.5 and xAI’s Grok Code Fast 1. The open labs are now under pressure to follow that same roadmap. If they succeed, 2026 won't just bring smarter open models; it will finally bring open models fast enough to compete with the cloud.